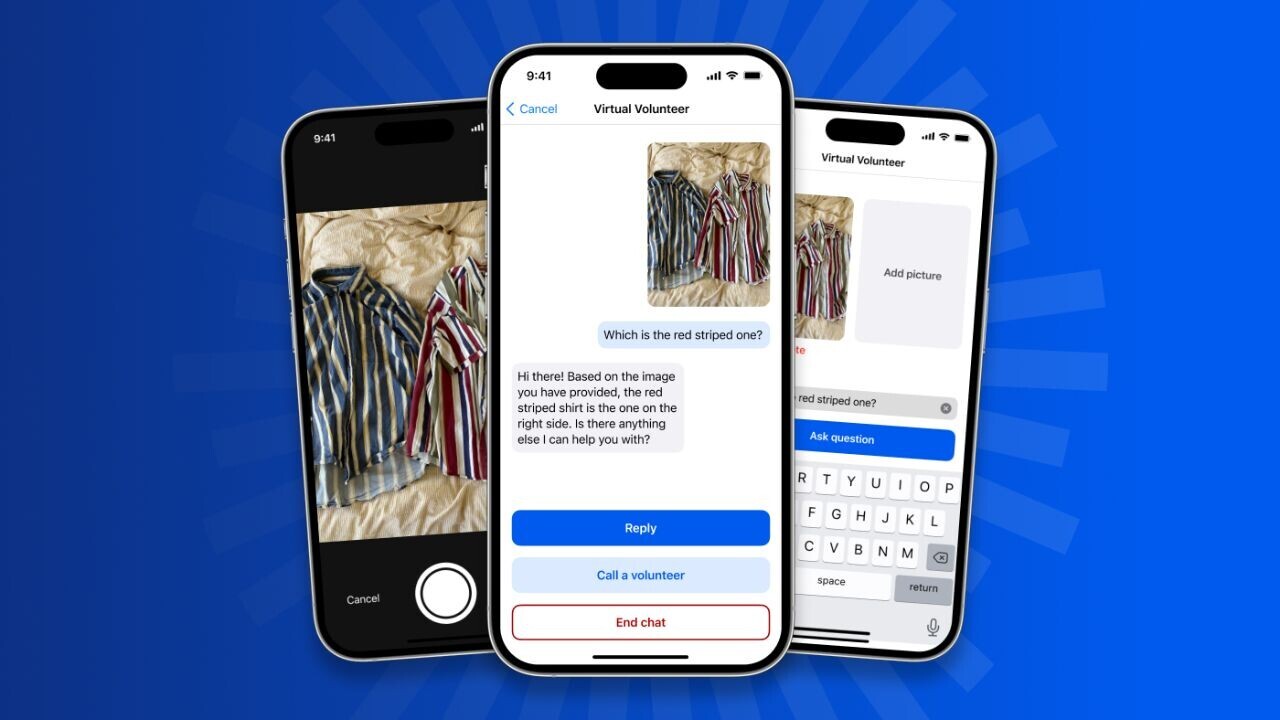

The first app to integrate GPT-4’s image-recognition abilities has been described as ‘life-changing’ by visually impaired users.

Be My Eyes, a Danish startup, applied the AI model to a new feature for blind or partially-sighted people. Named “Virtual Volunteer,” the object-recognition tool can answer questions about any image that it’s sent.

Imagine, for instance, that a user is hungry. They could simply photograph an ingredient and request related recipes.

If they’d rather eat out, they can upload an image of a map and get directions to a restaurant. On arrival, they can snap a picture of the menu and hear the options. If they then want to work off the added calories in a gym, they can use their smartphone camera to find a treadmill.

“I know we are in the midst of an AI hype cycle right now, but several of our beta testers have used the phrase ‘life-changing’ when describing the product,” Mike Buckley, the CEO of Be My Eyes, tells TNW.

“This has a chance to be transformative in empowering the community with unprecedented resources to better navigate physical environments, address everyday needs, and gain more independence.”

Virtual Volunteer takes advantage of an upgrade to OpenAI’s software. Unlike previous iterations of the company’s vaunted models, GPT-4 is multimodal, which means it can analyse both images and text as inputs.

Be My Eyes jumped at the chance to test the new functionality. While image-to-text systems are nothing new, the startup had never previously been convinced by their performance.

“From too many mistakes to the inability to converse, the tools available on the market weren’t equipped to solve many of the needs of our community,” says Buckley.

“The image recognition offered by GPT-4 is superior, and the analytical and conversational layers powered by OpenAI increase value and utility exponentially.”

Be My Eyes previously supported users exclusively with human volunteers. According to OpenAI, the new feature can generate the same level of context and understanding as a human volunteer. But if the user doesn’t get a good response or simply prefers a human connection, they can still call a volunteer.

Despite the promising early results, Buckley insists that the free service will be rolled out cautiously. The beta testers and wider community will play a central role in shaping the rollout.

Ultimately, Buckley believes the platform will provide users with both support and opportunities. Be My Eyes will also soon help businesses to better serve their customers by prioritizing accessibility.

“It’s safe to say the technology could give people who are blind or have low vision not only more power, but also a platform for the community to share even more of their talents with the rest of the world,” says Buckley. “To me, that’s an incredibly compelling possibility.”

If you or someone you know is visually impaired and wants to test the Virtual Volunteer, you can register for the waitlist here.
